How to Set Up Nvidia GPU-Enabled Deep Learning Development Environment with Python, Keras and TensorFlow

How to Use GPU in notebook for training neural Network? | Data Science and Machine Learning | Kaggle

GitHub - zia207/Deep-Neural-Network-with-keras-Python-Satellite-Image-Classification: Deep Neural Network with keras(TensorFlow GPU backend) Python: Satellite-Image Classification

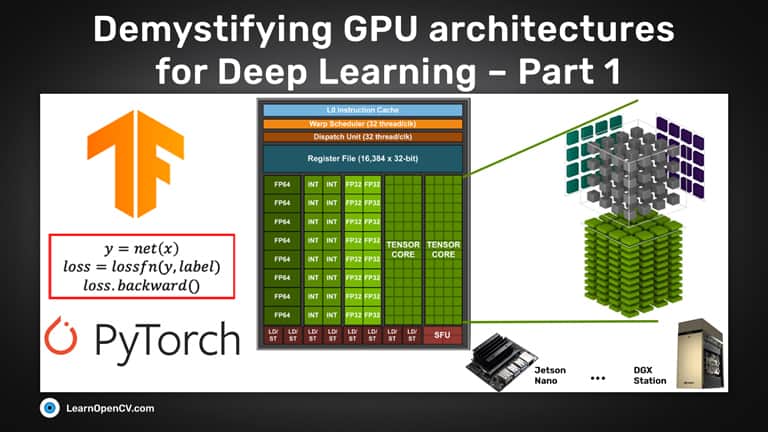

Training Deep Neural Networks on a GPU | Deep Learning with PyTorch: Zero to GANs | Part 3 of 6 - YouTube

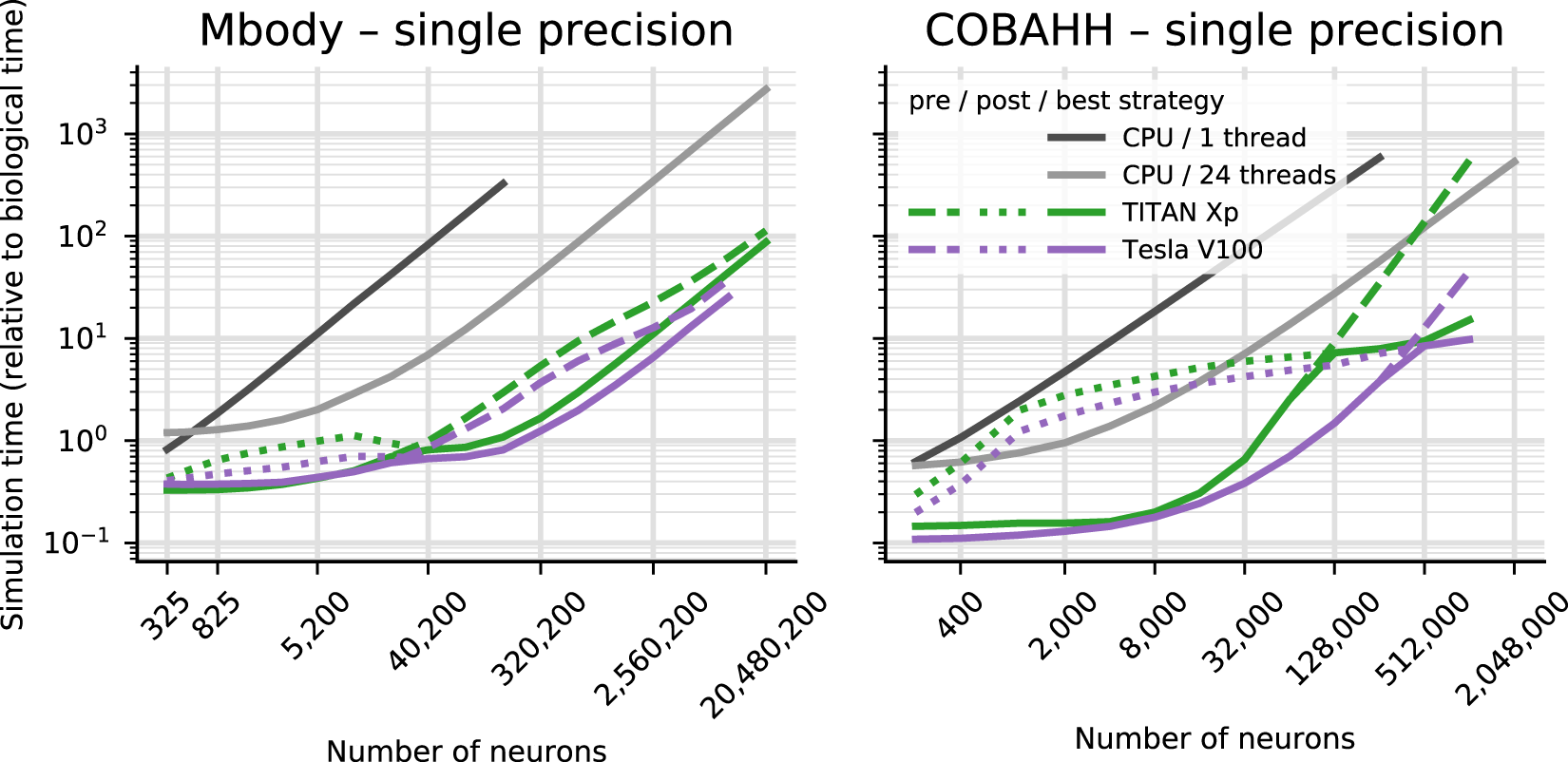

Brian2GeNN: accelerating spiking neural network simulations with graphics hardware | Scientific Reports

GitHub - pytorch/pytorch: Tensors and Dynamic neural networks in Python with strong GPU acceleration

GitHub - zylo117/pytorch-gpu-macosx: Tensors and Dynamic neural networks in Python with strong GPU acceleration. Adapted to MAC OSX with Nvidia CUDA GPU supports.

Parallelizing across multiple CPU/GPUs to speed up deep learning inference at the edge | AWS Machine Learning Blog