Apple MacBook Pro 16.2" with M1 Max chip: 10-core CPU, 64GB unified memory, 1TB SSD, 32-core GPU, 16-core Neural Engine, Liquid Retina display

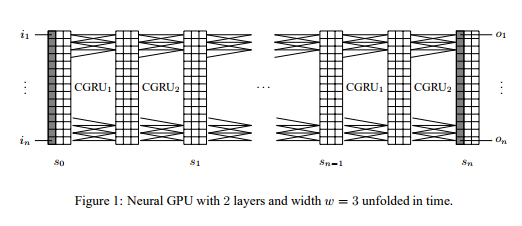

PARsE | Education | GPU Cluster | Efficient mapping of the training of Convolutional Neural Networks to a CUDA-based cluster

If I'm building a deep learning neural network with a lot of computing power to learn, do I need more memory, CPU or GPU? - Quora

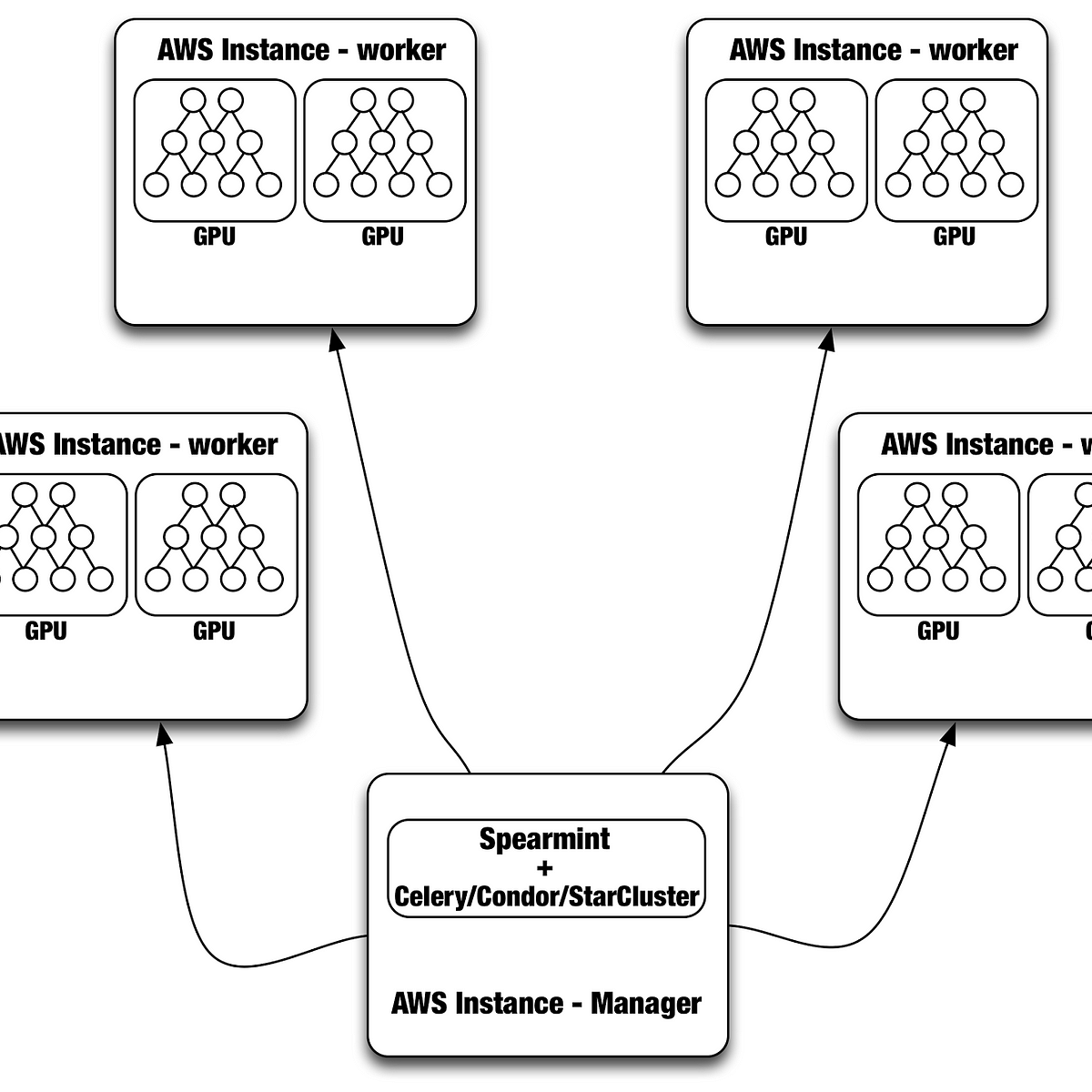

Distributed Neural Networks with GPUs in the AWS Cloud | by Netflix Technology Blog | Netflix TechBlog